UCLA Engineers Unveil Algorithm for Robotic Sensing and Movement

Advance may lead to improved performance in autonomous vehicles and satellite operations

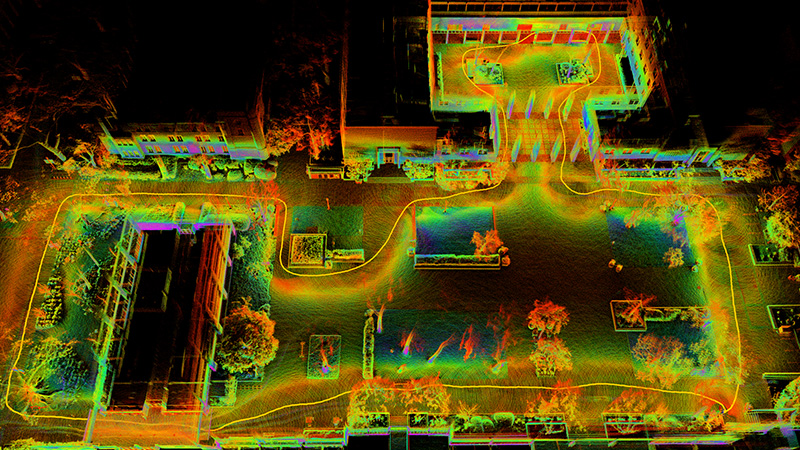

Image of the Court of Sciences at UCLA mapped by a quadcopter drone using new algorithms for improved speed and accuracy

A trio of UCLA engineers has developed a new computational procedure that allows autonomous robots to perceive, map and adjust to their environments more quickly and accurately — significantly improving the robots’ ability to navigate their surroundings in real time. The advancement could lead to life-saving applications for search-and-rescue robots and enhance current technology used in autonomous vehicles or planetary exploration.

Mobile robots rely on simultaneous localization and mapping to operate effectively and efficiently. In recent years, light detection and ranging technology, or lidar, has allowed robots to visualize their operating environment (mapping) and understand where they are within their dynamic surroundings (localization) at the same time. However, lidar-powered robots’ mapping accuracy and reaction time have remained fundamentally limited because the robots struggle with massive data processing and computational complexity.

Led by project investigator and assistant professor of mechanical and aerospace engineering Brett Lopez at the UCLA Samueli School of Engineering, the team developed a new algorithm to address the challenges. Built upon the current lidar sensing technology, direct lidar-inertial odometry and mapping, or DLIOM, is the focus of a UCLA study presented at the 2023 Institute of Electrical and Electronics Engineers (IEEE) International Conference on Robotics and Automation.

“The algorithm we developed produces precise geometric maps of nearly any environment in real time using a compact sensor and computing suite,” said study first author Kenny Chen, a recent UCLA electrical and computer engineering doctoral graduate. “It has several key advantages over other current solutions including faster computational speed, better map accuracy and improved operational reliability.”

Ryan Nemiroff, an electrical and computer engineering graduate student, is the third team member. Using a custom-built quadcopter drone, the researchers flew their DLIOM-powered unmanned aerial robot around UCLA’s Royce Hall and surrounding buildings in Dickson Plaza, the Franklin D. Murphy Sculpture Garden, the Court of Sciences and the Mildred E. Mathias Botanical Garden. The test flights found the new algorithm-embedded drone performed 20% faster and 12% more accurately than those armed with current state-of-the-art algorithms.

Image courtesy of the Verifiable and Control-Theoretic Robotics Laboratory at UCLA

A custom-built quadcopter drone powered by new algorithms developed by UCLA researchers

According to the researchers, DLIOM includes several fundamental innovations designed to enhance a robot’s capabilities, such as remembering previously visited locations, using only relevant environmental information, adapting to changing surroundings, correcting blurry incoming data from its sensors and processing novel data. DLIOM also speeds up the autonomous robot’s operation by combining data collection and processing into a single step.

The new computational method requires little hands-on tuning for different environments, such as a thick forest or a sprawling suburb, thereby allowing the robot to explore various topographies on a single trip without human intervention. In essence, the algorithm enables the robot to process information so quickly that it can configure its journey as it goes.

“When we send next-generation robots to explore other worlds, they will need to handle a range of environments and conditions, as well as establishing their own maps of their local surroundings,” said Lopez, who directs the Verifiable and Control-Theoretic Robotics Laboratory at UCLA. “Our new algorithm shows a great path forward to solving latency problems associated with mapping and localization challenges. More immediately, this could help enhance next-generation robots for safer self-driving vehicles, and for search-and-rescue and infrastructure-inspection efforts.”

In designing its algorithm, the team incorporated an inertial measurement unit (IMU), a device that measures the linear acceleration and angular rate of the object to which it is attached. By combining lidar scans with IMU measurements, the researchers developed robots that show improved localization accuracy and mapping clarity.

The current version of DLIOM is an update of a 2022 iteration published in IEEE Robotics and Automation Letters and available through IEEE’s research database, Xplore. That earlier version was developed by Chen, Lopez and their colleagues at UCLA and the NASA Jet Propulsion Laboratory for Team CoSTAR. The NASA team was one of the finalists in a subterranean robotic exploration challenge held in 2021 by the Defense Advanced Research Projects Agency.

Written from scratch by the three UCLA researchers in the programming language C++, DLIOM contains fewer than 3,000 lines of code, compared with the 5,000 to 10,000 lines typical of an algorithm developed by a large team. The design minimizes programming redundancy and computational complexity while preserving flexibility for real-world robotics applications.

The authors are exploring the possibility of patenting the algorithm and licensing the software through the UCLA Technology Development Group.